Key Takeaways

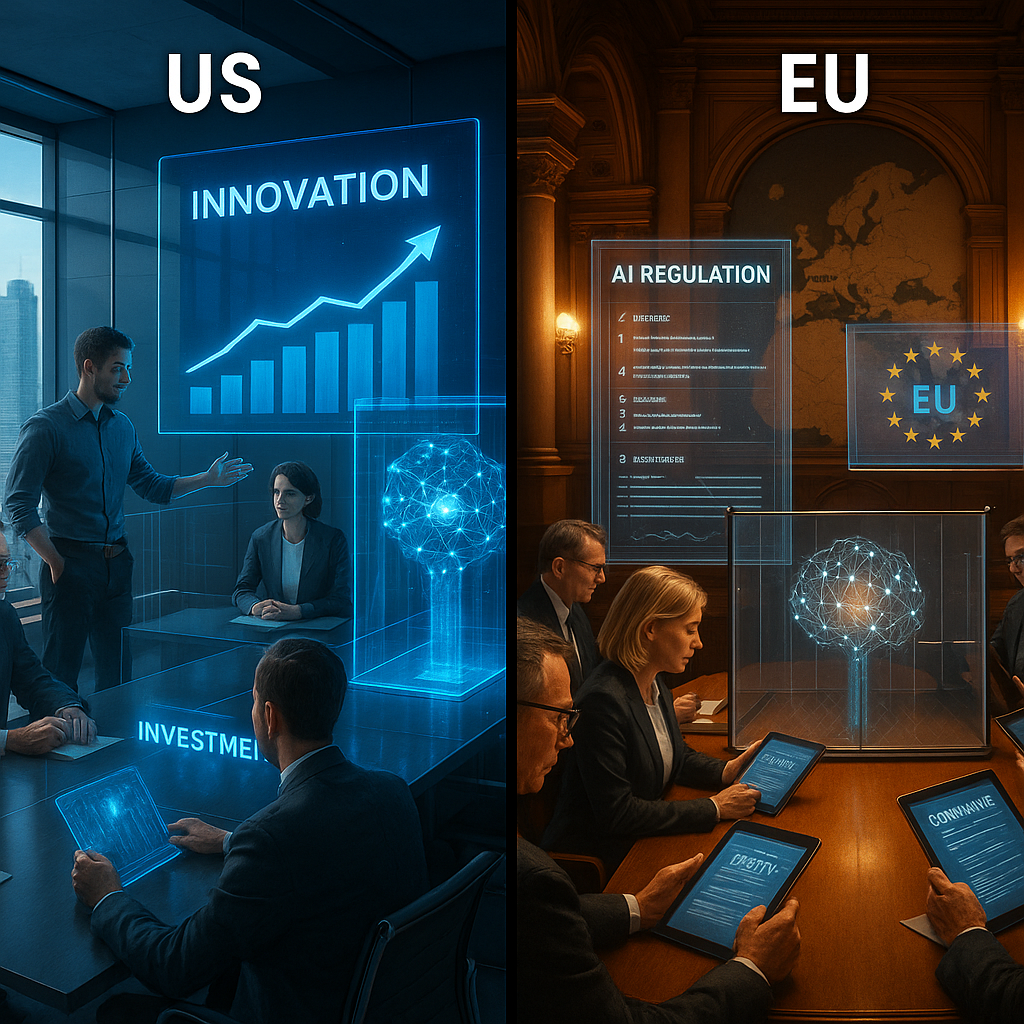

- Top story: The US pursues AI deregulation while Europe adopts stricter policies, revealing divergent visions for managing AI’s social consequences.

- Big Tech accelerates investment in advanced AI systems, setting new historical records and intensifying the innovation arms race.

- Geoff Hinton states that the next stage of AI depends on its capacity to replace human labor, raising complex questions about work, worth, and adaptation.

- China proposes a World AI Cooperation Organization, seeking to internationalize standards and address AI's societal impact across borders.

- Debates on AI oversight now contrast accelerationist narratives with calls for precaution, as both sides invoke humanity’s best interests.

- A growing number of stakeholders recognize that regulation shapes not only profit, but also collective definitions of agency, responsibility, and possibility.

Below, the full context and cross-cultural debates shaping our intelligent future.

Introduction

On 2 November 2025, the US pivoted toward AI deregulation as Europe reinforced its regulatory approach, exposing sharply divergent philosophies on managing AI's societal impact. At the same time, Big Tech surged ahead with unprecedented investments. China called for a World AI Cooperation Organization, underscoring an urgent debate around control, labor, and the collective ethics of intelligent machines.

Top Story: US and EU Diverge on AI Regulation

The United States announced a significant reduction in proposed AI oversight mechanisms yesterday, prioritizing innovation and market competition over strict guardrails. This deregulatory approach stands in clear contrast to the European Union, which has finalized additional compliance requirements for generative AI systems under its AI Act framework.

This transatlantic divergence reflects fundamentally different philosophical approaches to emerging technologies. US officials emphasized the importance of “permissionless innovation” for maintaining technological leadership. Meanwhile, EU regulators defended a precautionary stance as essential to protect fundamental rights and ensure trustworthy AI development.

Industry responses reflect these differences. Major Silicon Valley firms described the US approach as “forward-thinking” and “balanced.” In contrast, European AI startups expressed concerns about competitive disadvantages, while civil society organizations in both regions warned that less regulation could introduce serious gaps.

Beyond business interests, analysts note that the implications extend into global AI governance. Some suggest this divergence could lead to “regulatory arbitrage,” where companies choose where to base operations according to regulatory environments rather than technical or market considerations.

Legislative Timelines

The US plan for deregulation will be phased in over six months. There will be immediate relief for research applications, followed by a gradual easing of restrictions on commercial deployments. Administration officials stated the first executive orders for these changes will be signed next week.

European regulators have set a deadline of 15 December 2025 for companies to comply with new requirements for high-risk AI systems. These include enhanced documentation, testing, and transparency measures targeting generative models capable of producing human-like content.

Congressional hearings on the US approach are scheduled for mid-November. Several key lawmakers have already indicated that legislative interventions may follow if deregulation proves too broad. Industry stakeholders have until 30 November 2025 to submit formal comments on the proposed changes.

Also Today: AI's Societal Impact

Model Capabilities Controversy

Leaked benchmarks indicate that leading proprietary AI systems have reached capabilities beyond what their developers have publicly disclosed. Independent researchers analyzing the data report that these models demonstrate reasoning skills close to human expert levels in specialized domains.

This has raised significant concerns about transparency in AI development. Several ethicists have criticized the practice of “capability sandbagging” (deliberately obscuring model capabilities), stating it undermines meaningful public oversight and complicates regulatory efforts.

The controversy highlights the growing gap between public discourse on AI limitations and rapid advancements occurring out of sight. As one AI safety researcher remarked, policy decisions often rely on company disclosures, not on what the systems actually achieve.

AI alignment drift is increasingly cited by experts as an unseen risk: as models outpace documentation and oversight, subtle ethical deviations can go undetected despite formal compliance.

Workforce Transformation Data

Recent labor market data shows that AI-driven workforce displacement in knowledge sectors is occurring faster than predicted. An industry survey released this week documents marked reductions in entry-level positions in fields such as law, journalism, graphic design, and software development.

At the same time, demand for AI systems management and oversight roles has increased by 340% year-over-year, leading to a stark labor market split. Mid-career professionals with both domain expertise and AI skills command premium compensation, while opportunities for newcomers without credentials are shrinking.

Education systems are responding by overhauling curricula to emphasize human-AI collaboration, critical thinking, and problem-solving, abilities that remain distinctly human despite advancing AI tools.

This trend underscores the complex dynamic between human limitations and rapid technological change: intuition, creativity, and strategic oversight gain value as AI systems automate technical knowledge tasks.

Investment Patterns Shift

Venture capital funding for AI startups has pivoted toward deployment and integration technologies rather than foundational model research. Data from the last quarter shows $7.3 billion invested in companies building AI implementation tools across sectors, against $1.8 billion for fundamental research.

This shift suggests that value creation now focuses on applying proven AI capabilities to specific domains, rather than pursuing larger models. Healthcare led the field with $2.1 billion in new funding, followed by financial services at $1.4 billion and industrial sectors at $1.2 billion.

Despite regulatory divergence, European startups specializing in “compliance-ready AI” raised $940 million this quarter. This points to a distinct European AI ecosystem centered around responsible innovation.

EU AI Act compliance has become a key differentiator for startups, driving a surge of investment in companies offering documentation, transparency, and risk management tools tailored to European regulatory frameworks.

Also Today: Governance Frameworks

Public Opinion Research

A new global survey published today highlights marked differences in public attitudes toward AI governance. The research, which polled more than 32,000 respondents across 28 countries, found that 74% of Europeans favor strict preventive regulation, compared with 43% of Americans.

Analysis attributes these differences to distinct cultural perceptions of technological risk. European respondents consistently prioritized “protection from potential harms,” while Americans emphasized “continued innovation” and “economic competitiveness.”

Attitudes also vary by age. In all regions, respondents under 30 report greater comfort with AI systems yet show stronger support for mandatory transparency measures. This may suggest an emerging generational consensus on governance priorities that cuts across cultural differences.

Multi-Stakeholder Initiatives

Three major international AI governance collaborations expanded activities yesterday. The Global AI Governance Forum introduced a new framework for cross-border AI incident reporting, while the Responsible AI Consortium admitted 17 organizations to its trustworthy systems certification program.

The United Nations AI Advisory Body released its first comprehensive policy recommendations, emphasizing international coordination mechanisms to manage advanced capabilities. The document warns against “regulatory fragmentation” and proposes shared minimum standards for high-risk applications.

These efforts embody an emerging third path in AI governance: principles-based collaboration that sets shared norms without rigid compliance. However, critics remain skeptical of their effectiveness without binding enforcement mechanisms.

A number of experts have drawn parallels to philosophical debates over the origins of AI, questioning whether governance should treat intelligence as an emergent discovery shaped by collective norms or as a technology to be centrally managed and controlled.

What to Watch: Key Dates and Events

- World Economic Forum AI Governance Summit: 12–14 November 2025, Geneva

- US Congressional Hearings on AI Regulatory Framework: 17–18 November 2025, Washington DC

- European Commission AI Compliance Workshop for Industry: 20 November 2025, Brussels

- G20 Digital Economy Working Group Meeting on AI Coordination: 3–4 December 2025, Jakarta

- Global AI Safety Research Conference: 8–10 December 2025, Singapore

Conclusion

The divide between US AI deregulation and tighter EU controls marks a decisive moment for global technology policy. It is reshaping AI's societal impact across borders and sectors. These shifts influence investment flows, public perceptions, and the development of new governance frameworks, underscoring the complexity of balancing innovation with ethical oversight.

What to watch: Congressional hearings, compliance deadlines, and international AI governance summits scheduled through November and December.

For further exploration of the relationship between regulation, digital rights, and collective definitions of possibility, see Algorithmic Ethics and Governance.
