Key Takeaways

- Risk-driven classification sets the compliance agenda. The EU AI Act employs a tiered approach: it prohibits certain AI applications, mandates rigorous rules for high-risk systems, attaches transparency duties to limited-risk systems, and leaves minimal-risk AI largely untouched. Organizations must accurately identify and categorize their systems to ensure proper compliance.

- Stringent obligations for high-risk AI demand proactive adaptation. High-risk systems are subject to comprehensive impact assessments, transparency requirements, thorough audit trails, and robust AI management processes, which organizations may choose to align with standards such as ISO/IEC 42001. Organizations must elevate oversight to meet these heightened demands.

- Governance frameworks become operational imperatives. Implementing effective AI governance is now essential rather than optional. Systematic risk assessments, meticulous documentation, and continuous monitoring are required to remain resilient as regulations evolve.

- Implementation timeline requires immediate action. With phased enforcement deadlines approaching, organizations need to rapidly map their AI deployments, identify risk categories, and prepare documentation well in advance of key compliance milestones.

- Non-compliance carries steep penalties and reputational risk. Failure to comply with the EU AI Act may result in substantial fines, reaching up to EUR 35 million or 7% of worldwide annual turnover (whichever is higher) for prohibited practices, along with heightened public scrutiny. These consequences highlight the need for transparent, accountable AI development from the earliest stages.

- Bridging ancient methods with modern regulation. Much like interpreting prehistoric art calls for interdisciplinary understanding, organizations must adopt a holistic, cross-functional approach to decode risk and achieve ethical, legally compliant AI in today’s rapidly changing environment.

By demystifying the EU AI Act’s structure and surfacing actionable compliance strategies, this overview serves as a practical roadmap through regulatory complexity. It arms your organization to navigate the shifting landscape of AI with confidence, integrity, and accountability.

Introduction

Europe has made a bold statement on digital governance with the sweeping reach of the EU AI Act. This landmark legislation is more than a matter of legal compliance. It draws clear boundaries between acceptable innovation and intolerable risk, compelling organizations to rethink every aspect of how they build, deploy, and manage AI systems across all industries.

Grasping the intricacies of AI regulation is now non-negotiable for any entity operating within or partnering with the EU. The Act’s risk-driven framework calls for granular system classification, meticulous compliance for high-risk AI, and comprehensive documentation. These requirements are set to impact not just technical processes but also entire organizational governance strategies. As enforcement deadlines draw near and penalties for lapses become significant, the need for urgent, proactive responses has never been greater. Companies must quickly assess risks, establish forward-looking oversight, and align operations with legal and ethical expectations in an era where public trust and regulatory scrutiny are intertwined.

Let’s unravel what the EU AI Act really means for your business, explore the critical steps for timely compliance, and examine how to construct an AI governance framework robust enough for Europe’s evolving regulatory future.

Understanding the EU AI Act Framework

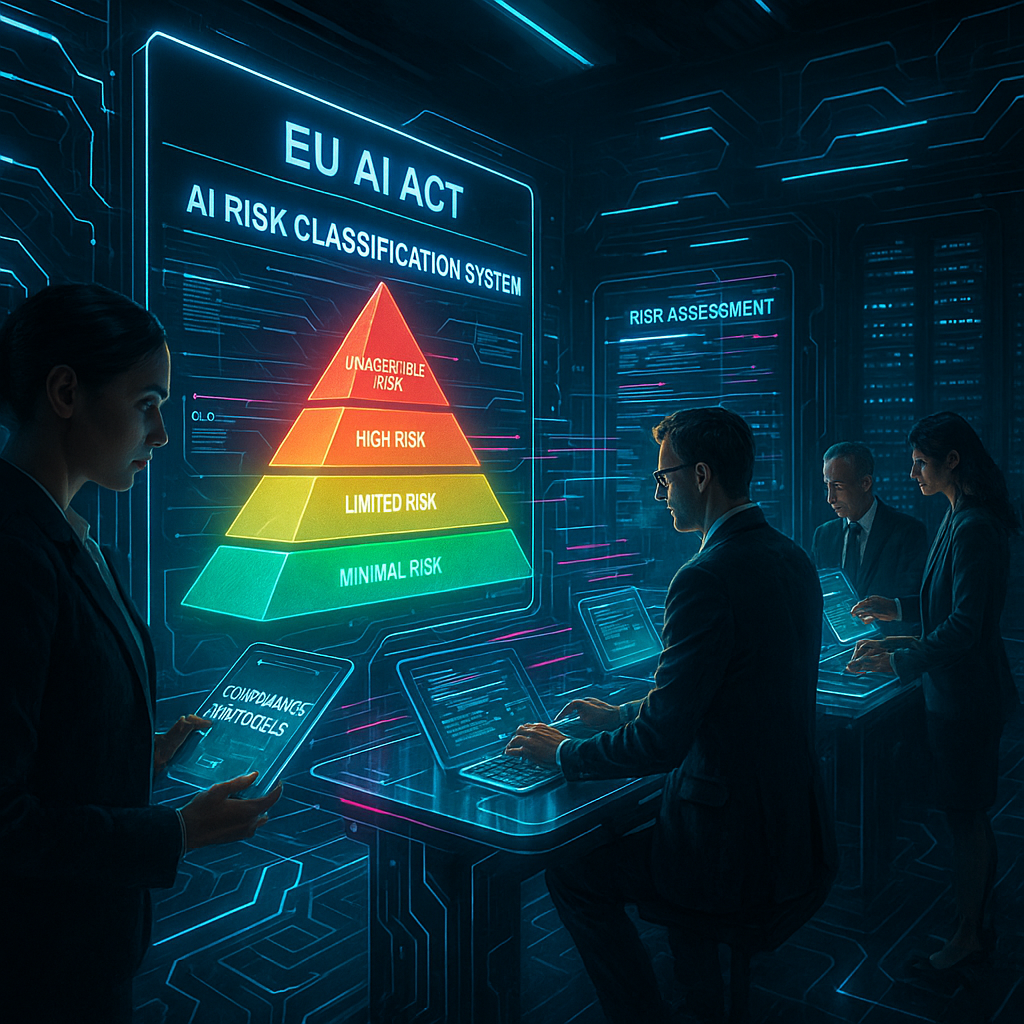

The EU AI Act stands as a comprehensive blueprint for responsible artificial intelligence across Europe. At its center is a risk-based methodology, dividing AI systems by the degree of potential harm and defining corresponding regulatory requirements for each category.

Risk-Level Classifications

The EU AI Act segments AI applications into four pivotal risk categories:

- Unacceptable Risk
  - Systems that pose clear threats to fundamental rights or public safety, such as social scoring mechanisms, manipulative AI that exploits vulnerable groups, and real-time remote biometric identification in publicly accessible spaces.
  - These applications are banned, aside from narrowly defined exceptions, chiefly for law enforcement use of real-time biometric identification.

- High Risk
  - Systems deployed in areas critical to society, including the operation of critical infrastructure (like energy or transport), educational assessment, employment and workforce management, access to essential services (healthcare, banking), law enforcement, migration, and border management.
  - These applications face the most stringent regulatory scrutiny of any permitted systems, reflecting their heightened potential impact.

- Limited Risk
  - Examples include customer-facing chatbots, emotion-recognition systems, and biometric categorization tools. Depending on the context of use, the latter two can also fall into the high-risk or prohibited tiers.
  - Such systems carry specific transparency obligations, such as clearly disclosing to people that they are interacting with an automated system.

- Minimal Risk
  - Everyday AI applications like basic video games, spam filters, and standard inventory management software.
  - These systems face no new obligations under the Act beyond existing law and can operate largely within existing best practices.

By setting up clear lines between these categories, the Act requires organizations to embark on thorough assessments of their AI portfolios. This clarity not only streamlines compliance efforts but also supports a culture of responsible innovation across industries.
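As a rough illustration of how a first-pass triage of an AI portfolio might look, the sketch below maps a system's declared use case to one of the four tiers described above. The `AISystem` type, the `classify` function, and the keyword sets are hypothetical and drawn only from the examples in this article; they are not the Act's legal tests, and any real classification needs legal review.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical labels based on this article's examples, not on the legal text.
PROHIBITED_USES = {"social_scoring", "manipulation_of_vulnerable_groups"}
HIGH_RISK_AREAS = {
    "critical_infrastructure",
    "education",
    "employment",
    "essential_services",
    "law_enforcement",
    "migration_border",
}
TRANSPARENCY_USES = {"chatbot", "emotion_recognition", "biometric_categorization"}


@dataclass
class AISystem:
    name: str
    use_case: str


def classify(system: AISystem) -> RiskTier:
    """Return the most severe tier whose label set contains the use case."""
    if system.use_case in PROHIBITED_USES:
        return RiskTier.UNACCEPTABLE
    if system.use_case in HIGH_RISK_AREAS:
        return RiskTier.HIGH
    if system.use_case in TRANSPARENCY_USES:
        return RiskTier.LIMITED
    # Anything not matched above defaults to the minimal tier in this sketch.
    return RiskTier.MINIMAL


if __name__ == "__main__":
    print(classify(AISystem("cv-screener", "employment")).value)  # prints "high"
```

Checking the tiers from most to least severe means a system that matches several labels is always assigned the stricter one, which is the safer default for an initial inventory.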

Industry Implications

The tiered risk approach affects a diverse range of industries. In healthcare, AI-powered diagnostic tools and patient triage systems may fall under high-risk requirements, demanding rigorous testing and transparency. In finance, systems that drive loan approvals or credit scoring face robust obligations, although AI used solely to detect financial fraud is carved out of the Act’s credit-scoring category. In education, automated examination grading or adaptive learning platforms must meet demonstrable fairness and explainability standards. Even retail and marketing see effects, as AI-driven customer behavior analysis or dynamic pricing tools are classified and managed according to risk. By extending beyond the tech sector, the EU AI Act prompts organizations in every field to scrutinize how AI touches their operations and to take appropriate action.

Compliance Requirements for High-Risk AI Systems

High-risk AI systems are a central focus of the EU AI Act. These technologies are entrusted with processes and decisions that shape real-world outcomes for people, critical infrastructure, and societal stability. As a result, their regulatory obligations are both detailed and uncompromising.

Essential Documentation Requirements

Compliance for high-risk systems hinges on rigorous documentation and operational transparency, including the following components:

- Risk Management System
  - Organizations must systematically identify and assess risks throughout the AI lifecycle.
  - This process includes analyzing both the probability and possible severity of harms, implementing mitigation strategies, and maintaining ongoing risk evaluations.

- Data Governance
  - Quality assurance processes must ensure that training, validation, and test data are relevant, sufficiently representative, and as free of errors and bias as possible.
  - Protocols must document methods for minimizing data-driven discrimination and safeguarding data privacy.
  - Security measures must be formally captured and subject to continuous oversight.

- Technical Documentation
  - Comprehensive documentation details the AI system’s architecture, functional specifications, and development methodologies.
  - These records include testing procedures, validation protocols, performance metrics, and versions of deployed models.

Organizations must retain these materials for audit purposes, proving both initial compliance and ongoing diligence.
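The probability-and-severity analysis described under the risk management system can be sketched as a simple risk register. This is a minimal illustration, assuming a 1 to 5 scale for both dimensions and a flagging threshold of 12; the scale, the threshold, and the `Risk` and `prioritize` names are hypothetical choices, not values prescribed by the Act.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    description: str
    probability: int  # hypothetical scale: 1 (rare) to 5 (almost certain)
    severity: int     # hypothetical scale: 1 (negligible) to 5 (critical)
    mitigation: str = ""

    def score(self) -> int:
        """Combine probability and severity into a single ranking score."""
        return self.probability * self.severity


def prioritize(risks, threshold=12):
    """Return the risks scoring at or above the threshold, highest first."""
    flagged = [r for r in risks if r.score() >= threshold]
    return sorted(flagged, key=lambda r: r.score(), reverse=True)


if __name__ == "__main__":
    register = [
        Risk("Biased triage recommendations", probability=3, severity=5),
        Risk("Stale model version in production", probability=4, severity=3),
        Risk("Typo in user-facing label", probability=4, severity=1),
    ]
    for risk in prioritize(register):
        print(risk.score(), risk.description)
```

Keeping the mitigation text on the same record as the score makes it easier to show an auditor, in one place, both how a risk was rated and what was done about it.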

Ongoing Monitoring and Assessment

No high-risk AI deployment can be considered “set and forget.” The Act mandates:

- Continuous monitoring of system performance

- Documentation and remediation of incidents or deviations from expected behavior

- Updates to the system and re-assessment of associated risks or impacts

- Compliance assessment with fundamental rights, including fairness and non-discrimination

- Evaluation of potential environmental impacts associated with large-scale AI operations

This ongoing oversight is essential for adapting to emerging risks and regulatory updates.
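One way to picture the first two items in the list above (continuous monitoring, plus documenting deviations from expected behavior) is a small monitor that compares observed metrics against a baseline and records an incident when the gap is too large. The `Monitor` and `Incident` names, the baseline values, and the 0.05 tolerance are assumptions made for illustration only.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Incident:
    metric: str
    observed: float
    expected: float
    recorded_at: datetime


@dataclass
class Monitor:
    baseline: dict            # metric name -> expected value
    tolerance: float = 0.05   # hypothetical allowed absolute deviation
    incidents: list = field(default_factory=list)

    def check(self, metric: str, observed: float) -> bool:
        """Return True if the metric is within tolerance; otherwise log an incident."""
        expected = self.baseline[metric]
        within = abs(observed - expected) <= self.tolerance
        if not within:
            self.incidents.append(
                Incident(metric, observed, expected, datetime.now(timezone.utc))
            )
        return within


if __name__ == "__main__":
    monitor = Monitor(baseline={"accuracy": 0.92})
    monitor.check("accuracy", 0.90)   # within tolerance, nothing recorded
    monitor.check("accuracy", 0.80)   # outside tolerance, incident recorded
    print(len(monitor.incidents))     # prints 1
```

The timestamped incident list is the part that matters for compliance: it gives a record of when a deviation was detected, which remediation and re-assessment steps can then reference.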

Cross-Industry Considerations

Imagine AI solutions in transportation. Autonomous vehicle controllers must capture every safety test and regulatory check. In healthcare, algorithmic triage tools require comprehensive records not only to demonstrate efficacy, but to swiftly identify bias or emerging patient safety concerns. Within the legal sector, contract automation systems must maintain transparent audit trails to ensure clients and regulators can assess how critical decisions are made. In marketing or retail, such documentation helps organizations prove compliance and defend responsible practices, especially if customer trust is ever challenged.

Implementation Timeline and Key Deadlines

Navigating the EU AI Act’s requirements means aligning organizational timelines with regulatory deadlines. Strategic planning is key to ensuring both a smooth transition and sustained compliance.

Critical Compliance Phases

- Phase 1: Initial Assessment (2024)
  - Inventory all current and planned AI applications across business functions.
  - Identify the risk level of each system according to the Act’s categories.
  - Conduct a detailed gap analysis between existing practices and regulatory expectations.
  - Map out necessary resources and responsibilities.

- Phase 2: Documentation Development (2024-2025)
  - Prepare technical documentation, assemble risk and data governance frameworks, and develop incident monitoring and reporting procedures.
  - Initiate organization-wide training with an emphasis on compliance protocols.

- Phase 3: Implementation (2025-2026)
  - Deploy frameworks and monitoring systems at scale.
  - Conduct trial audits and initial risk assessments.
  - Establish robust mechanisms for reporting, incident response, and compliance demonstration.

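Specific deadlines vary by classification and organizational role. Under the Act as adopted, the prohibitions on unacceptable-risk practices apply from 2 February 2025, most remaining provisions (including those for stand-alone high-risk systems) apply from 2 August 2026, and high-risk AI embedded in already-regulated products has until 2 August 2027. All organizations are nonetheless advised to proceed without delay, as proactive preparation is far less costly than last-minute overhauls or crisis-driven remediation.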
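The Phase 1 inventory and gap analysis can be sketched as a comparison between the artifacts each system already has and the artifacts its risk tier calls for. The artifact names and the `REQUIRED_ARTIFACTS` mapping below are hypothetical shorthand for the documentation areas discussed in this article, not an official checklist.

```python
# Hypothetical mapping from risk tier to the documentation a system should have.
REQUIRED_ARTIFACTS = {
    "high": {
        "risk_management",
        "data_governance",
        "technical_documentation",
        "monitoring_plan",
    },
    "limited": {"transparency_notice"},
    "minimal": set(),
}


def gap_analysis(inventory):
    """Map each system name to the sorted list of artifacts it still lacks."""
    gaps = {}
    for system in inventory:
        required = REQUIRED_ARTIFACTS[system["tier"]]
        missing = sorted(required - set(system["artifacts"]))
        if missing:
            gaps[system["name"]] = missing
    return gaps


if __name__ == "__main__":
    inventory = [
        {"name": "loan-scoring", "tier": "high",
         "artifacts": ["risk_management", "technical_documentation"]},
        {"name": "support-bot", "tier": "limited",
         "artifacts": ["transparency_notice"]},
        {"name": "spam-filter", "tier": "minimal", "artifacts": []},
    ]
    for name, missing in gap_analysis(inventory).items():
        print(name, "is missing:", ", ".join(missing))
```

Systems with nothing missing are left out of the result, so the output doubles as a prioritized to-do list for Phase 2.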

Cross-Functional Approaches to Compliance

Meeting the expectations of the EU AI Act requires a symphony of expertise across your organization. No single department can shoulder the responsibility alone. AI regulation is as cultural as it is technical.

Key Organizational Functions

- Legal and Compliance Teams
  - Interpret evolving regulations, determine documentation protocols, and coordinate compliance monitoring. They build the frameworks that withstand both legal scrutiny and ethical challenge.

- Technical Teams
  - Analyze architectures, monitor performance, document coding standards, and test for transparency and fairness. Their expertise ensures that systems not only function, but meet the highest compliance standards.

- Business Operations
  - Assess the impact of compliance on day-to-day activities, align resources, adapt processes, and coordinate training. Operational resilience depends on business units embedding compliance into their culture.

- Ethics and Governance
  - Develop ethical principles and responsible innovation frameworks. They foster engagement with stakeholders, monitor for bias or unintended consequences, and create a feedback loop for evolving AI practices.

This cross-functional synthesis is essential for holistic compliance. In fields like environmental science, sustainability teams work with compliance and data scientists to ensure climate modeling tools meet both regulatory and societal norms. Educational institutions involve curriculum directors, IT specialists, and ethics boards to build resilient, transparent AI-powered learning platforms.

Conclusion

The EU AI Act redefines the boundaries of artificial intelligence, demonstrating that true progress is measured not by unbridled innovation, but by the ethical stewardship and accountability that accompany new technologies. Its risk-based framework provides structure without stifling ambition, shielding society from avoidable harms while enabling responsible AI-driven growth across sectors as diverse as healthcare, finance, education, and beyond.

Thriving under this new regime is not simply a matter of checking regulatory boxes. Instead, it compels organizations to dismantle silos, weaving together legal, technical, operational, and ethical threads to create robust, future-proof AI governance. The Act is more than a regulatory hurdle. It marks a paradigm shift, challenging us to define the boundaries of power, control, and trust in a world shaped by “alien minds.”

Looking forward, the organizations that will lead are those that internalize these principles early, translating them into tangible practices and cultural norms. As the legal, social, and technological fabric continues to weave itself in unexpected patterns, the EU AI Act offers not just a mandate, but a lever for shaping a future where artificial and human intelligence coexist with mutual respect and accountability. The decisive question is not whether your organization will comply, but how effectively and imaginatively it will leverage this framework to gain insight, build trust, and foster enduring innovation in a changing world.
