Key Takeaways

- Top story: Trump’s executive order challenges AI regulatory approaches in 38 states, aiming to consolidate governance at the federal level.

- Adaptive Security secures $81 million in funding to strengthen defenses against AI-powered deepfakes, reflecting growing concerns over digital authenticity.

- NIST issues draft guidelines for integrating AI into cybersecurity, inviting public commentary on national standards for safe deployment.

- Brain-inspired chips promise transformative reductions in the energy demands of large-scale AI applications by modeling computation on human cognition.

- The balance between centralization and innovation raises fresh questions about how AI’s societal footprint will be managed, and by whom.

Below, the full context and diverse perspectives on AI’s evolving societal impact.

Introduction

On 17 December 2025, the AI society impact press review leads with a new Trump executive order that confronts a patchwork of AI regulations across 38 states. The order seeks to centralize control and clarify authority. Meanwhile, NIST’s draft guidelines on AI cybersecurity integration signal an intensifying debate over how power, innovation, and trust will be balanced in a rapidly shifting technological landscape.

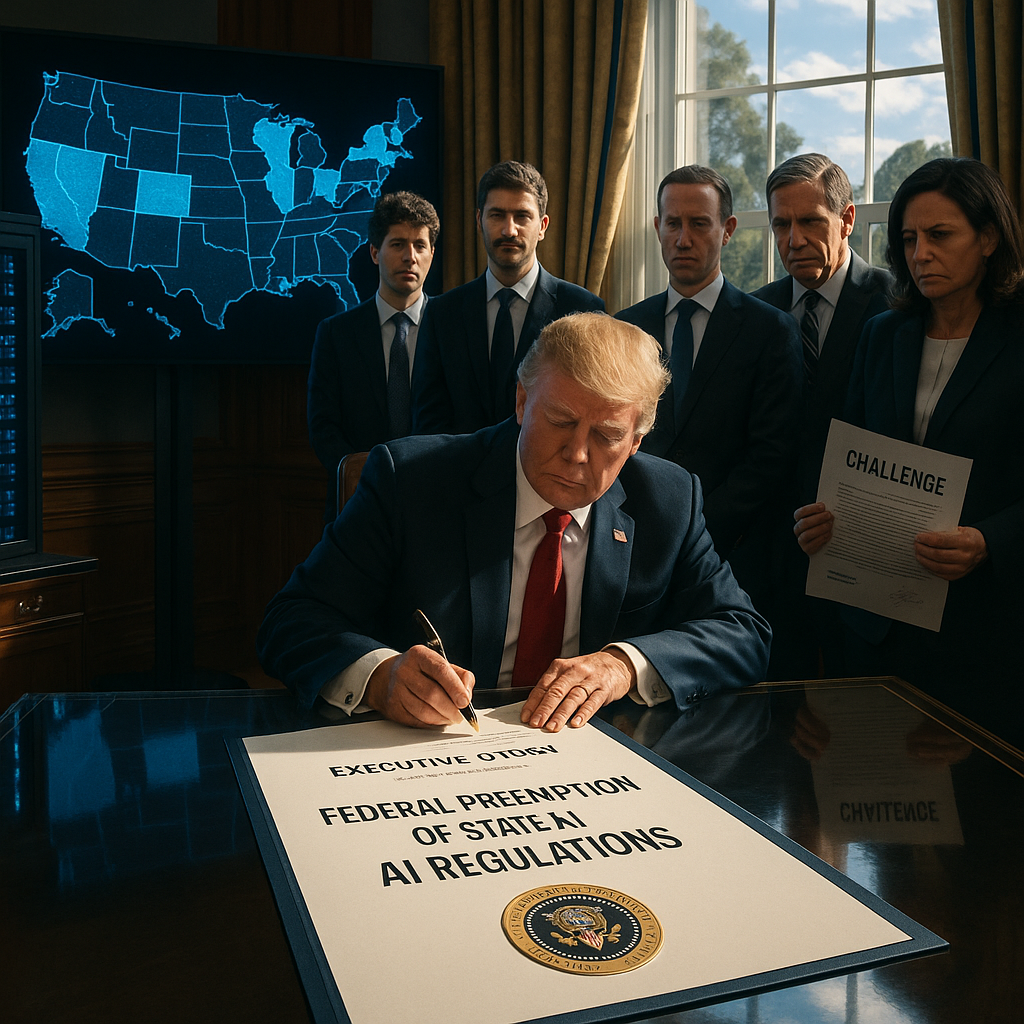

Top Story: Trump Orders Federal Preemption of State AI Regulations

President Trump signed an executive order on 16 December 2025 establishing federal preemption over state-level artificial intelligence regulations. He stated that a “patchwork of inconsistent rules” threatens America’s technological leadership. The order directs federal agencies to develop unified regulatory frameworks set to supersede existing state AI laws, including those in California, New York, and Colorado.

The executive order targets state rules on AI transparency, data privacy, and mandatory impact assessments, which technology companies have criticized as burdensome. At the White House signing ceremony, where he was joined by technology executives, Trump remarked that “innovation cannot thrive under 50 different rulebooks.”

Industry leaders, including OpenAI CEO Sam Altman and Meta’s Mark Zuckerberg, expressed support for the move, describing it as essential for maintaining American competitiveness against China. Altman called it “a crucial step toward ensuring AI development isn’t strangled by regulatory complexity,” while also emphasizing the need for responsible guardrails.

In response, civil liberties groups and state attorneys general from California and New York announced immediate legal challenges. The Electronic Frontier Foundation warned that federal preemption “could eliminate vital protections that states have pioneered.” This development highlights ongoing tensions between efficiency and democratic experimentation.

Also Today: AI Governance Models

UK’s Hybrid Regulatory Approach Gains Traction

The United Kingdom’s “principles-based” AI governance framework is drawing international attention as a potential middle path between the European Union’s stringent AI Act and the historically more permissive approach of the United States. The UK model centers on adaptable guidelines enforced by existing regulatory bodies rather than a dedicated AI authority.

International observers note that this strategy allows for considerable industry self-regulation while maintaining governmental oversight. Japan and Canada have shown interest in similar frameworks, suggesting a governance philosophy that aims to balance innovation with accountability.

Tech policy experts highlight that these regulatory models reflect broader societal values. Oxford University’s Professor Martha Reynolds stated that “the UK model represents a pragmatic compromise between European precaution and American permissiveness” in analysis published on 16 December 2025.

Open Source AI Movements Challenge Corporate Control

The Responsible AI Collective, a global network of researchers and developers, released “Prometheus,” an open-source large language model, on 16 December 2025. This model, operating under community governance, rivals commercial systems and distinguishes itself with transparent code and training methodology.

The release shows a growing philosophical divide between centralized corporate AI development and distributed governance models. Prometheus’s licensing prohibits use in surveillance, weapons systems, or applications lacking human oversight, embedding ethical constraints directly into the technology.

More than 3,000 AI researchers have signed the collective’s “Distributed AI Governance Manifesto,” which argues that “the future of artificial intelligence is too important to be determined solely by profit-seeking entities or single governments.” This position challenges both corporate AI monopolies and nationalist approaches to AI development.

Also Today: Ethics and Implementation

Algorithm Transparency Act Passes Senate Committee

The Senate Commerce Committee approved the Algorithm Transparency Act on 16 December 2025 with a bipartisan vote of 19-4. The legislation would require companies deploying high-risk AI systems to conduct and publish impact assessments before implementation and maintain human oversight over critical decisions.

Senator Maria Cantwell, co-sponsor of the bill, emphasized that “transparency isn’t anti-innovation—it builds the trust necessary for broad AI adoption.” The bill exempts smaller companies and research institutions, focusing regulatory requirements on systems with potential for significant social impact.

Industry responses were mixed. IBM and Microsoft expressed support for the measure, while Google and Amazon cited concerns about compliance costs and the potential disclosure of proprietary information. The challenge of balancing innovation with accountability remains central to the legislative debate.

Ethical drift in AI systems is a growing point of discussion as more complex legislation is drafted.

Medicine’s AI Integration Reaches Critical Milestone

The FDA granted approval on 16 December 2025 for the first fully autonomous AI diagnostic system for stroke detection. This marks a major milestone in clinical AI implementation. The system, developed by NeuroVision AI, can independently analyze brain scans and initiate emergency protocols without human physician review during critical moments.

This approval signals a notable shift in the FDA’s stance on AI medical devices, which previously required “human in the loop” oversight. Dr. Janet Rivera of the Mayo Clinic described the decision as “a profound shift in our relationship with artificial intelligence in healthcare—from tool to colleague.”

Following the announcement, ethical questions regarding liability, patient consent, and medical authority have intensified. The American Medical Association acknowledged the system’s life-saving potential while emphasizing the need for careful monitoring of outcomes and clear frameworks for responsibility when algorithms make mistakes.

For further insights on the implications and challenges of these developments, see the analysis on AI medical diagnostic technology.

Market Wrap: Tech Stocks React

Wall Street responded positively to Trump’s executive order. The tech-heavy Nasdaq Composite rose 2.3% by the close on 16 December 2025. AI-focused companies recorded the largest gains, with Nvidia up 4.7% and Anthropic’s recent IPO shares increasing by 6.2%.

The “AI Infrastructure Index,” tracking companies providing computing resources and foundation models, reached an all-time high. Investors interpreted federal preemption as likely to accelerate AI deployment. Smaller AI startups also advanced on expectations of simplified compliance requirements.

Traditional technology firms with significant AI investments, including Microsoft, Google, and Amazon, all gained more than 3%. Meanwhile, cybersecurity firms focused on state compliance solutions saw share prices fall, with California-based PrivacyGuard Technologies down 8.9%.

To understand the wider implications of new regulatory and compliance standards, explore the EU AI Act’s compliance and risk framework.

What to Watch: Key Dates and Events

- NIST comment period on AI Risk Management Framework 2.0 closes 20 December 2025

- Congressional hearings on federal AI preemption scheduled for 12 January 2026

- Implementation guidance for Trump’s executive order expected by 15 February 2026

- EU-US AI Governance Summit in Brussels, 28-29 January 2026

- Quarterly earnings calls for major AI companies (Anthropic: 15 January; OpenAI: 21 January; Nvidia: 3 February)

These evolving governance models echo developments in AI origin philosophy and foundational debates.

Conclusion

Trump’s executive order to centralize AI regulation marks a pivotal realignment in the ongoing debate over innovation, oversight, and the foundations of AI’s societal impact. With federal preemption set to override years of state experimentation, the tension between national efficiency and local autonomy is escalating. What to watch: upcoming congressional hearings, the NIST framework comment deadline, and new guidance on the order’s rollout in early 2026.

For deeper context on how governance frameworks shape the future of artificial intelligence, see digital rights and algorithmic ethics in governance.
