Key Takeaways

- Lawmakers target AI in HR: The bill would restrict employers from using fully automated AI systems to make employment decisions such as hiring, firing, or promotion.

- Human accountability emphasized: Companies must ensure a qualified person reviews and approves significant workplace choices made by AI, reinforcing the importance of human judgment.

- Transparency requirements envisioned: Employers using AI tools would be required to inform job applicants and employees when such systems are involved in decision-making.

- Algorithmic bias under scrutiny: The legislation addresses concerns about discrimination embedded in AI models, particularly against marginalized groups in the workforce.

- Next steps: The bill faces committee debate before potential votes in the House and Senate, possibly setting a global precedent for AI governance.

Introduction

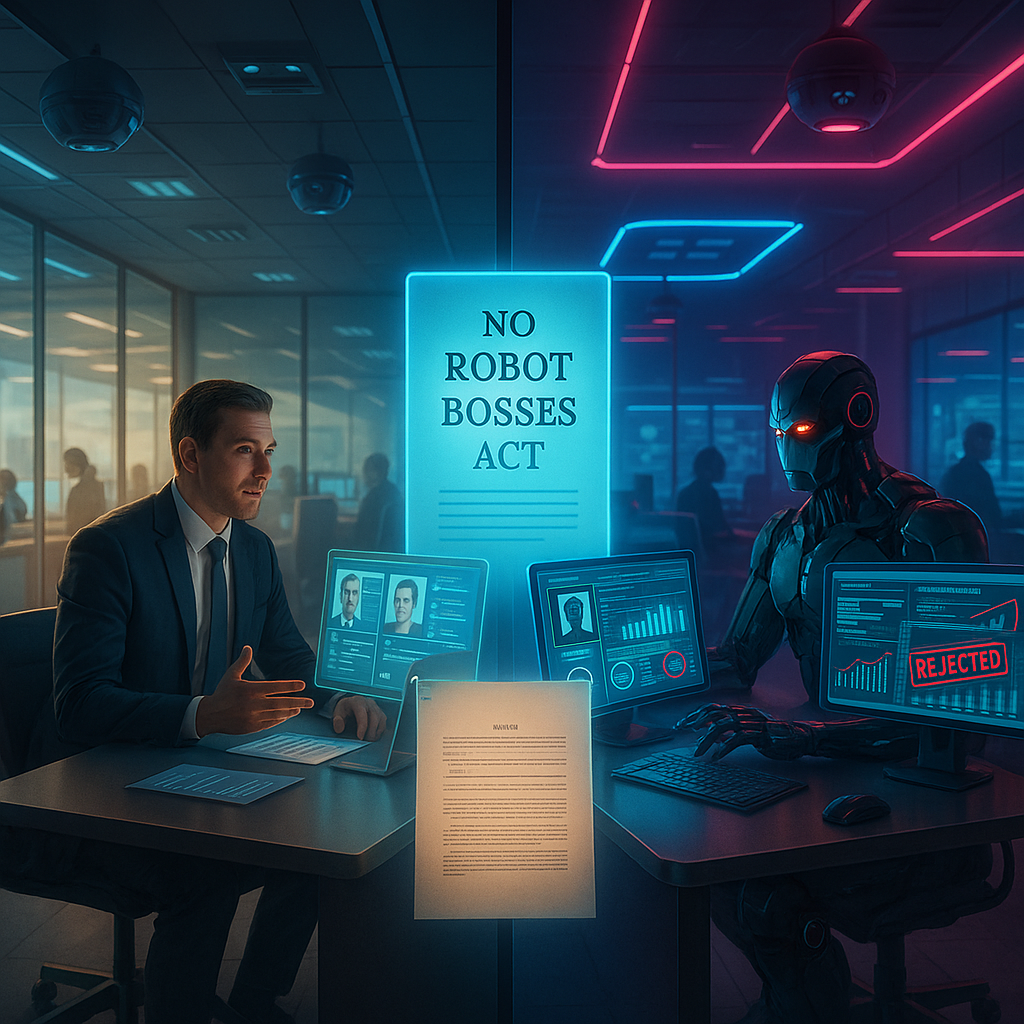

A bipartisan coalition of U.S. lawmakers introduced the “No Robot Bosses Act” on Tuesday, aiming to ensure that human oversight remains central in employment decisions as artificial intelligence becomes more integrated into the workplace. The proposed legislation would limit fully automated AI participation in hiring and firing, citing concerns about algorithmic bias and the erosion of human agency at work.

The ‘No Robot Bosses Act’: What Lawmakers Propose

The “No Robot Bosses Act” seeks to set clear limits on artificial intelligence in the workplace. The bill would prohibit employers from relying entirely on AI systems to make hiring, firing, or promotion decisions, and would mandate meaningful human oversight of those decisions.

Under this legislation, companies would need to ensure a “human in the loop” for all significant employment decisions. A qualified human representative must review and approve any algorithmic recommendations that affect employees’ careers.

Transparency is also a focus. Employers would be required to notify job candidates and employees whenever AI tools are used in evaluation processes and clarify how such systems influence decisions.
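For readers who build or audit HR software, the two requirements described above (human sign-off and notice to the affected person) can be pictured as a simple gate in front of any final action. The following is a minimal sketch under stated assumptions, not language from the bill: the class names, fields, and the `finalize` function are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Recommendation:
    """An algorithmic suggestion about one person (hypothetical model)."""
    subject: str
    action: str      # e.g. "hire", "fire", "promote"
    rationale: str


@dataclass
class Decision:
    """Tracks the review state of one recommendation (hypothetical model)."""
    recommendation: Recommendation
    reviewer: Optional[str] = None    # qualified human who reviewed it
    approved: Optional[bool] = None   # None until a human has reviewed
    subject_notified: bool = False    # was the person told AI was involved?


def notify_subject(decision: Decision) -> None:
    """Record that the person was informed an AI tool took part."""
    decision.subject_notified = True


def human_review(decision: Decision, reviewer: str, approve: bool) -> None:
    """Attach a human reviewer's verdict to the AI recommendation."""
    decision.reviewer = reviewer
    decision.approved = approve


def finalize(decision: Decision) -> str:
    """Return the final action; refuse to act without review and notice."""
    if decision.reviewer is None or decision.approved is None:
        raise PermissionError("no human review recorded")
    if not decision.subject_notified:
        raise PermissionError("subject was not notified of AI involvement")
    return decision.recommendation.action if decision.approved else "no action"
```

In this sketch the AI output is only ever a recommendation: nothing takes effect until a named reviewer has recorded a verdict and the notification flag is set. A gate like this does not by itself guarantee that the reviewer exercised independent judgment.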

Representative Anna Eshoo (D-CA), a co-sponsor of the bill, stated that the goal is not to halt innovation, but to ensure technology serves human values in the workplace. Eshoo emphasized that workers should be assessed by humans who appreciate their unique qualities, not just by algorithms programmed for narrow objectives.

The Philosophy Behind Human Oversight

Beneath the legislative details lies a philosophical conversation about agency and accountability in an automated world. The bill challenges the belief that algorithmic decisions are automatically more objective or efficient than those made by people.

Dr. Meredith Whittaker, president of the Signal Foundation and an AI ethics researcher, explained that AI systems lack the moral intuition and contextual awareness humans bring to complex decisions. AI, she said, is trained on historical data that can embed and amplify past biases.

The bill’s call for “meaningful oversight” acknowledges this gap. AI processes vast amounts of data efficiently, but humans contribute emotional intelligence, ethical judgment, and cultural understanding to workplace decisions.

Senator Mark Warner (D-VA) underscored this distinction. He stated that algorithms may seek efficiency, but they cannot appreciate the deeper human potential of workers. Some decisions, he argued, are simply too consequential to leave to systems that cannot grasp the full scope of humanity.

Real-World Impacts of Algorithmic Management

The risks of relying on AI-driven workplace decisions have become increasingly evident. A 2022 Harvard Business School study found that automated resume-screening tools frequently rejected qualified candidates for minor resume gaps or unconventional career paths that human reviewers would have recognized as assets.

In a notable example, Amazon discontinued an AI recruiting tool in 2018 upon discovering it systematically discriminated against women. The tool, trained on data from a predominantly male workforce, had learned to favor male applicants.

AI-powered productivity monitoring in warehouses and gig economy jobs has also caused concern. Research published in the Journal of Business Ethics reports that such systems have led to higher stress, increased injuries, and more impersonal working conditions.

Maria Rodriguez, a warehouse worker who spoke at congressional hearings, described being flagged by AI systems for decreased productivity due to back pain. She noted that such systems have no understanding of human physical limitations or health.

Industry Response and Implementation Challenges

The technology industry’s response to the bill is divided. The Information Technology Industry Council, representing leading tech firms, voiced concerns about innovation barriers, but agreed on the need for responsible AI deployment.

Jason Oxman, ITI President and CEO, said the industry shares the goal of ensuring responsible AI use in employment. He warned that regulation should avoid unintended consequences that might stifle beneficial technologies.

Implementation details present ongoing challenges, especially around defining “meaningful human oversight.” Critics question whether human reviewers genuinely exercise independent judgment when presented with algorithmic recommendations.

Dr. Alex Hanna, Director of Research at the Distributed AI Research Institute, pointed out that simply having a person approve AI decisions does not fully address the issue. Research indicates that people often defer to algorithmic suggestions even when they disagree. This tendency is known as automation bias.

Broader Implications for AI Governance

The “No Robot Bosses Act” reflects broader efforts to set boundaries for artificial intelligence in high-stakes areas. It appears alongside the European Union’s AI Act, which designates AI systems used in employment as “high-risk” and subjects them to greater scrutiny.

The legislation’s approach to workplace decisions could influence governance in other sectors. Legal scholars suggest that principles of human oversight, transparency, and accountability could help shape how AI is managed in healthcare, criminal justice, education, and more.

Professor Frank Pasquale of Brooklyn Law School stated that the legislation establishes an important precedent. Technology, he argues, should enhance human decision-making rather than replace it, particularly where fundamental human interests are at stake.

This debate connects with wider philosophical questions about the future of work as intelligent machines proliferate. By explicitly preserving human control in employment contexts, lawmakers are reaffirming that work is a social relationship, not merely an economic transaction ripe for full automation.

What Happens Next

The bill will proceed through committee hearings in both chambers of Congress. Lawmakers intend to gather input from labor representatives, AI developers, and employment law experts to fine-tune the proposed rules.

A central challenge remains the enforcement of these provisions. Current drafts would authorize the Equal Employment Opportunity Commission and the Department of Labor to investigate violations and establish compliance guidelines.

In the meantime, some companies are acting ahead of legislation. Salesforce, for example, recently adopted a “Human-Centered AI” policy that requires all automated employment recommendations to undergo substantial human review before being implemented.

The bill’s future depends in part on whether bipartisan cooperation can be sustained. Although protecting worker dignity appeals across party lines, debates continue around the scope and implementation of regulation for emerging technologies.

Conclusion

The “No Robot Bosses Act” marks a crucial moment in clarifying the boundaries between automation and human leadership in the workplace. It reflects society’s ongoing conversation with technological change. The bill’s outcome may set the direction for AI governance across multiple sensitive domains. What to watch: upcoming congressional hearings and expert testimony will shape the bill’s future, testing both the strength of bipartisan support and the clarity of enforcement measures.
