Key Takeaways

- AI intuition diverges fundamentally from human intuition. Rather than mimicking human cognition, AI develops a distinct form of ‘machine intuition’ rooted in data-driven abstraction and pattern recognition, which is reshaping how algorithms interpret fairness across varied legal and institutional contexts.

- The absence of emotional intelligence constrains AI’s role in decision-making. Unlike human judges or mediators, AI cannot experience emotion or empathy, both of which are vital for nuanced decisions involving complex moral, social, and cultural factors.

- Algorithmic bias poses a significant threat to perceptions of fairness. AI systems reflect and may amplify biases present in their training data, making it imperative to define ethical boundaries and adopt safeguards that protect impartiality in sectors ranging from criminal justice to healthcare and finance.

- Despite vast computational power, AI remains hindered in navigating ethical dilemmas. The lack of subjective experience and value-based judgment means machines fall short when interpreting the ambiguous moral terrain that often shapes real-world justice.

- Transparency is foundational for building public trust in machine-mediated justice. Clear methodologies and explainable AI outputs are essential for maintaining accountability and legitimacy in legal, educational, and administrative applications.

- Ethical frameworks must evolve rapidly to keep pace with AI’s capabilities. Developing adaptive and context-sensitive guidelines for algorithmic fairness is necessary as AI challenges traditional processes and adapts to shifting societal values in domains like environmental regulation, healthcare triage, and financial adjudication.

- Human oversight will remain indispensable in legal and high-stakes AI applications. While machines can enhance analytical rigor, only human experts can interpret laws, emotions, and cultural dynamics on the path to authentic justice.

Fairness is an ideal fraught with both philosophical complexity and technical intricacies, especially as AI moves beyond its computational roots. In the sections ahead, we will probe the conceptual gap between machine and human intuition, spotlighting the ethical tensions and societal considerations that emerge when justice is entrusted to intelligent algorithms.

Introduction

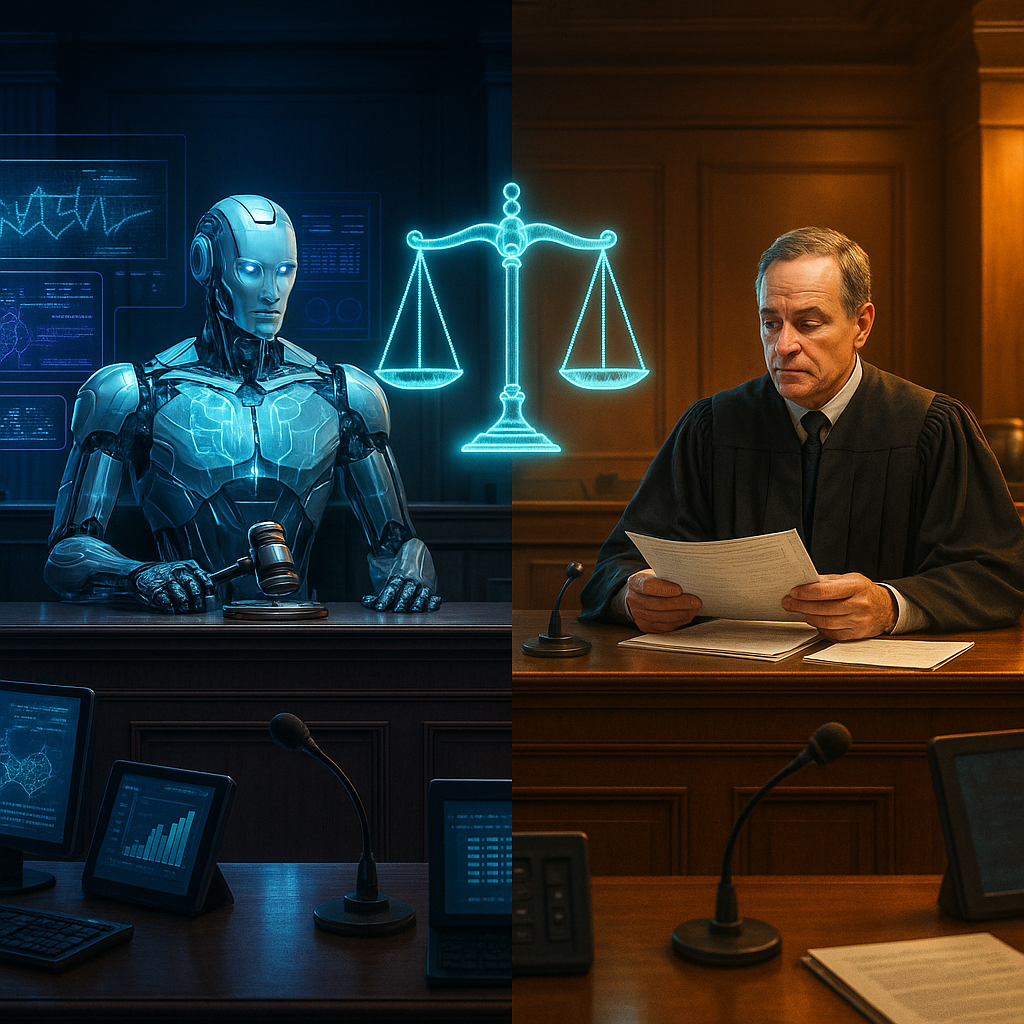

What is at stake when we ask machines to interpret one of humanity’s most elusive ideals: fairness? AI brings algorithmic intuition to the analysis of vast legal and social data, yet it encounters a profound divide separating computational logic from the unpredictable, often contradictory, demands of human justice.

This tension is not simply about technological progress or efficiency. It is about our collective readiness to allow machine justice to inform or even supplant roles once grounded in empathy, tradition, and lived experience. As neural networks transcend static automation, they are increasingly called upon to address ambiguities that resist quantification. Can artificial intuition ever bridge the gulf created by the absence of emotion, or does it risk reducing justice to statistical convenience?

Unpacking these disparities reveals key insights into the evolution of algorithmic decision-making. It raises urgent questions about what it means for AI to mediate justice in an era where fairness is often subjective, context-dependent, and fiercely debated.

The Technical Architecture of Machine Justice

Machine justice is not simply the automation of legal processes; it is the translation of intricate ethical principles into programmable logic. To approach fairness, AI systems rely on advanced technical frameworks capable of parsing both legal complexity and evolving societal expectations.

Neural networks built for judicial applications are crafted with deep, multi-layered architectures. These systems process extensive datasets from court records, policy databases, and even public sentiment. While they can mimic certain aspects of human reasoning through exposure to precedent, their so-called “intuition” arises from the detection of patterns rather than genuine understanding.

Pattern Recognition Versus Moral Reasoning

AI excels at recognizing and extrapolating patterns within enormous repositories of decisions, precedents, and statutes. In the legal sector, for example, one algorithm might comb through decades of sentencing data, identifying statistical consistencies, outliers, and risk factors.

Yet this pattern-matching expertise is not the same as moral reasoning. Whereas humans bring emotional awareness and insight into context and intent, machines remain limited by their reliance on numeric abstraction. The COMPAS recidivism prediction tool illustrates this dynamic starkly. While its overall accuracy was similar across racial groups, a 2016 ProPublica analysis found that its errors were not evenly distributed: Black defendants who did not go on to reoffend were far more likely to be labeled high risk than white defendants who did not reoffend. This disparity emerged not from malicious programming, but from a lack of moral context and a mechanical focus on pattern recurrence.
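To make the gap between accuracy and fairness concrete, here is a minimal sketch of how an auditor might compare error rates across groups. The records are synthetic, and the group labels and the `group_metrics` helper are illustrative assumptions; this is not the method used in any actual review of COMPAS.

```python
from collections import defaultdict

def group_metrics(records):
    """Compute accuracy and false positive rate for each group.

    Each record is a tuple: (group, predicted_high_risk, reoffended).
    """
    counts = defaultdict(lambda: {"correct": 0, "total": 0, "fp": 0, "negatives": 0})
    for group, predicted_high_risk, reoffended in records:
        c = counts[group]
        c["total"] += 1
        if predicted_high_risk == reoffended:
            c["correct"] += 1
        if not reoffended:
            # Person did not reoffend: a high-risk label here is a false positive.
            c["negatives"] += 1
            if predicted_high_risk:
                c["fp"] += 1
    return {
        group: {
            "accuracy": c["correct"] / c["total"],
            "false_positive_rate": c["fp"] / c["negatives"] if c["negatives"] else None,
        }
        for group, c in counts.items()
    }

# Synthetic records, invented purely for illustration.
records = (
    [("A", True, False)] * 2 + [("A", False, False)] * 2 + [("A", True, True)] * 2
    + [("B", True, False)] * 1 + [("B", False, False)] * 3
    + [("B", True, True)] * 1 + [("B", False, True)] * 1
)

metrics = group_metrics(records)
for group, values in sorted(metrics.items()):
    print(group, values)
```

In this toy data both groups receive the same accuracy, yet people in group A who did not reoffend are wrongly flagged twice as often as those in group B. A single headline accuracy figure would hide exactly the kind of disparity that matters for fairness.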

Similar tensions play out in other fields. In finance, fraud detection algorithms can unearth hidden correlations, yet may overlook the ethical nuances of false positives affecting vulnerable communities. In healthcare, AI can triage patient risk swiftly but can miss holistic considerations that physicians weigh. The result is a machine intuition that is technically impressive but philosophically incomplete.

The Role of Training Data

Training data constitutes the ethical bedrock of artificial intuition. The character and diversity of the input shape outcomes in ways both subtle and profound. Systems trained solely on historical legal decisions risk perpetuating old biases, reflecting, rather than reforming, the inequities of past generations.

Emerging approaches counter this by integrating broader ethical sources and diverse demographic inputs. Incorporating statutory laws, codes of professional conduct, and community standards can help refine decision metrics. For example, environmental policy algorithms now include stakeholder values such as sustainability and community health, guiding resource allocation or conservation decisions that respect human impact as much as regulatory compliance.
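Before any model is trained, the historical record itself can be inspected for the kind of inherited inequity described above. The sketch below is one simple way to do that, under the assumption that each past decision can be reduced to a group label and a favorable or unfavorable outcome; the data and the function names `favorable_rates` and `disparity_ratio` are hypothetical.

```python
from collections import defaultdict

def favorable_rates(decisions):
    """Share of favorable outcomes per group.

    Each decision is a tuple: (group, outcome_was_favorable).
    """
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, outcome_was_favorable in decisions:
        totals[group] += 1
        if outcome_was_favorable:
            favorable[group] += 1
    return {group: favorable[group] / totals[group] for group in totals}

def disparity_ratio(rates):
    """Ratio of the lowest group rate to the highest (1.0 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0

# Synthetic historical decisions, invented for illustration.
history = (
    [("X", True)] * 8 + [("X", False)] * 2
    + [("Y", True)] * 4 + [("Y", False)] * 6
)

rates = favorable_rates(history)
ratio = disparity_ratio(rates)
print(rates, ratio)
```

A low ratio does not prove the past decisions were unjust, since groups may differ in relevant ways. It does signal that a model trained on this record will likely reproduce the gap unless the data or the training objective is adjusted. How much disparity is acceptable remains a policy question, not a technical one.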

Philosophical Challenges in Machine Ethics

The rise of AI-driven justice surfaces deep-rooted philosophical debates: What is fairness? Can intentionality be replicated in code? Is transparent automation preferable to opaque, if well-intentioned, human judgment?

The Question of Moral Agency

The modern push to automate fairness confronts a core dilemma: moral agency. For AI to offer more than mechanical decisions, it would require a form of self-awareness or value-based reasoning. While some researchers envision artificial moral agents, current algorithms operate without consciousness or the lived experience from which ethics often arises.

Can artificial intuition ever move beyond sophisticated imitation into a space where it reasons about values as humans do? In legal contexts, this remains an open question. In domains like environmental management, AI’s value-neutrality may blind it to implications that require contextual empathy or foresight, skills learned through life, not code.

Emotional Intelligence and Empathy

Human adjudication depends not merely on knowledge, but on the capacity for empathy. Judges, mediators, and arbitrators routinely weigh remorse, rehabilitation potential, cultural context, and unique circumstances that shape each case. While attempts are underway to inject emotion recognition into AI systems using biometric and linguistic cues, the result is, at best, a facsimile of emotional understanding without the capacity for genuine empathy.

This limitation extends across sectors. In education, AI can personalize learning but cannot truly understand a student’s struggle or aspiration. In marketing, predictive targeting can identify preferences, but it cannot intuit the shifting tides of sentiment or public mood. No matter the sophistication, the lack of emotional depth constrains algorithmic justice.

Current Applications and Limitations

Even with these philosophical limits, real-world applications of AI in justice and related fields reveal a growing, albeit imperfect, ecosystem of machine-augmented decision-making.

Success Cases in Limited Domains

AI systems have demonstrated value in highly structured and repetitive tasks requiring consistent application of rules. In the legal domain, tools like DoNotPay have reportedly helped overturn roughly 160,000 parking tickets by applying codified legal logic to standardized scenarios. In healthcare, AI supports radiology by flagging patterns indicative of disease, boosting diagnostic accuracy in time-sensitive contexts.

Financial services have witnessed AI-driven models optimizing credit risk assessments by leveraging vast data sets, helping to streamline loan approvals and detect fraudulent behavior. In environmental science, algorithms model climate impacts to inform regulatory policy and resource planning.

However, these successes are typically confined to well-defined, low-ambiguity problems. When presented with cases layered in ethical, cultural, or emotional complexity, the limitations of machine intuition rapidly become apparent.
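The parking ticket example shows why such problems suit automation: the grounds for appeal can be written down as explicit rules. The following is a minimal sketch of that idea, not a description of how DoNotPay or any real tool works. The `Ticket` fields, the three rules, and the 10-minute grace period are all hypothetical; real appeal grounds vary by jurisdiction.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Ticket:
    signage_visible: bool
    meter_working: bool
    minutes_over_limit: int

# Hypothetical appeal grounds, each paired with a test on the ticket.
RULES = [
    ("Signage was missing or obscured", lambda t: not t.signage_visible),
    ("Parking meter was out of order", lambda t: not t.meter_working),
    ("Overstay fell within a 10-minute grace period",
     lambda t: 0 < t.minutes_over_limit <= 10),
]

def appeal_grounds(ticket):
    """Return every codified ground that applies to this ticket."""
    return [reason for reason, applies in RULES if applies(ticket)]

def triage(ticket):
    """Draft an appeal when a rule matches; otherwise hand off to a person."""
    grounds = appeal_grounds(ticket)
    if grounds:
        return "draft_appeal", grounds
    return "refer_to_human", []

print(triage(Ticket(signage_visible=False, meter_working=True, minutes_over_limit=30)))
print(triage(Ticket(signage_visible=True, meter_working=True, minutes_over_limit=45)))
```

The second ticket matches no rule, so the sketch refers it to a human rather than guessing. Anything outside the codified scenarios, such as a medical emergency or a disputed fact, is exactly the ambiguity that rule-based logic cannot weigh.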

Transparency and Explicability Challenges

A persistent barrier to broader adoption is the opacity of AI processes. Deep learning models, especially, are often described as “black boxes,” delivering outputs with limited visibility into the reasoning steps taken along the way.

In courts, this has led to challenges regarding the right to explanation and due process. In healthcare, clinicians may hesitate to trust diagnostic suggestions that cannot be rationalized in human terms. The problem is not merely technical but deeply ethical: public confidence in machine justice hinges on visible, explainable logic.

Efforts to create explainable AI (XAI) are gaining momentum, with visualizations, statistical confidence measures, and narrative decision logs providing stakeholders with insight into “why” a certain recommendation was made. Without such mechanisms, trust, accountability, and widespread acceptance remain elusive.
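For simple models, a narrative decision log of this kind can be produced directly from the model itself. The sketch below assumes a small logistic scoring model for a loan-style decision; the feature names, weights, and bias are invented for illustration. Deep networks need heavier attribution techniques, so this shows the goal rather than a general solution.

```python
import math

# Hypothetical weights for an illustrative scoring model.
WEIGHTS = {"missed_payments": 0.8, "years_employed": -0.3, "debt_to_income": 1.5}
BIAS = -1.0

def explain(features):
    """Return the estimated probability and a plain-language decision log."""
    # In a linear model each feature's contribution is weight * value.
    contributions = {name: WEIGHTS[name] * features.get(name, 0.0) for name in WEIGHTS}
    logit = BIAS + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-logit))

    # List the most influential features first.
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    log = [f"Estimated probability: {probability:.2f}"]
    for name, value in ranked:
        if value > 0:
            direction = "raised"
        elif value < 0:
            direction = "lowered"
        else:
            direction = "did not change"
        log.append(f"{name} {direction} the score ({value:+.2f})")
    return probability, log

probability, log = explain({"missed_payments": 2, "years_employed": 1})
for line in log:
    print(line)
```

Each line of the log ties the outcome to a named input, which gives a reviewer something concrete to contest. Whether such a log counts as a sufficient explanation in a courtroom or clinic is a separate question that the code cannot settle.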

Emerging Frameworks for Machine Justice

To reconcile technical prowess with ethical responsibility, new frameworks are emerging that deliberately merge machine analysis and human values. These approaches increasingly seek to operationalize both pattern-recognition abilities and explicit moral guidance.

Hybrid Systems and Human Oversight

A significant trend in algorithmic justice is the integration of hybrid models, blending AI’s analytical power with human
